It's all about the data, stupid!

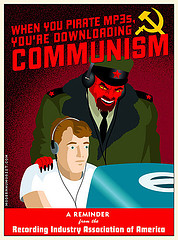

Electronic evidence and data gathering are becoming an important part of modern litigation. When Viacom requested data from Google pertaining to their massive litigation, they did not just ask for the usage information related to their own content; they requested everything: all information about every single video ever uploaded to YouTube. And they got it, together with all removed videos. That is a lot of information (reportedly, the user database itself is 12TB). Now some poor sod at Viacom is going to have to go through that...

Welcome to the Terabyte age: gazillions of bytes stored on servers all over the world. What is useful and what is not? Is this even a relevant question any more? Chris Anderson, of Wired and Long Tail fame, thinks that the deluge of data heralds the end of theory as we know it. All of our preconceptions should be rewritten in order to cope with an increase in storage and processing power that will not peak any time soon.

He uses Google as the typical example of where things are headed when you have seemingly unlimited storage space. Google gathers 1 Petabyte of information every 72 minutes, an amount so humongously large, so mind-bogglingly huge, that all comparisons break down. No individual can sift through that amount of data to find useful information in it, so Google relies on "intelligent" sorting software to go through the data and answer questions about it. Google does not need people reading web pages in order to know what they contain; all it needs is indexing and sorting software that will correlate one set of data with another. So Google "knows" that this blog is probably related to some vague legal issues not because someone there has read the small blurb on the blog, or because I classified it as such, but because of the high incidence of certain words, as well as the tags I use. This also allows the reasonably accurate allocation of AdWords and other paid search material.
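To make the "high incidence of certain words" idea concrete, here is a toy sketch in Python. It is in no way how Google actually does it; the topic vocabularies and the `guess_topic` function are invented purely for illustration, and a real system would rely on far richer statistics than a raw keyword count.

```python
import re
from collections import Counter

# Hypothetical topic vocabularies, made up for this illustration.
TOPIC_KEYWORDS = {
    "law": {"litigation", "copyright", "court", "evidence", "legal"},
    "cooking": {"recipe", "oven", "flour", "butter", "bake"},
}

def guess_topic(text):
    """Return the topic whose keywords occur most often in the text, or None."""
    # Count every lowercase word in the text.
    words = Counter(re.findall(r"[a-z]+", text.lower()))
    # Score each topic by the total number of keyword occurrences.
    scores = {
        topic: sum(words[w] for w in vocab)
        for topic, vocab in TOPIC_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    # If no keyword appears at all, decline to guess.
    return best if scores[best] > 0 else None

post = "Electronic evidence is becoming an important part of modern copyright litigation."
print(guess_topic(post))
```

Nobody has to read the post for the script to file it under "law"; the word counts alone do the work, which is the whole point of the argument.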

All this wealth of data means that we are finding it more and more difficult to write theories that account for all of the stored facts. Physics has become a statistical science where cats can be alive and dead at the same time. Biology has become a statistical science of billions of combinations of DNA. Our theories are simply not general enough to describe reality any more; they offer only statistical approximations of it. Anderson claims that all of the above heralds the end of theory:

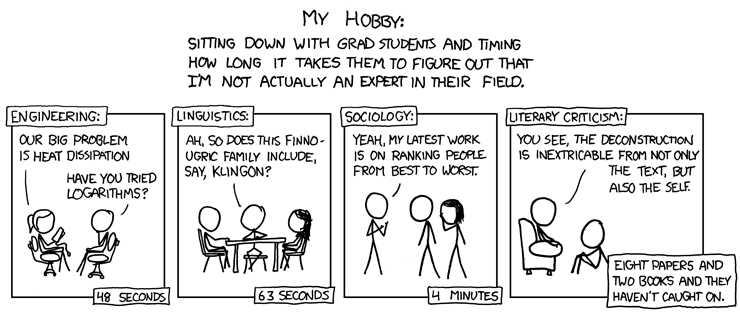

"Petabytes allow us to say: "Correlation is enough." We can stop looking for models. We can analyze the data without hypotheses about what it might show. We can throw the numbers into the biggest computing clusters the world has ever seen and let statistical algorithms find patterns where science cannot."This may sound awfully reductionist. Can we forget about analysis, and simply look for correlation about everything? If it works, why not? Think of how we study the law. Most of our legal theories work on the assumption that human beings are rational individuals acting on the pursuit of social/selfish/rational goals and interests. But what if what we are is simply a collection of responses that can be mapped and correlated at statistical level? We are increasingly leaving trace of how we think, not on what we write, but on what we click.

It is my reductionist claim that once we start going through the average clicking path of a person through a day, we will discover some unsavoury truths about our own nature.
